THREADS AND CONCURRENCY

A process is a program under execution: an instance of an application currently running in memory. A thread is the basic unit of CPU utilization. It is an entity within a process that is scheduled for execution to perform a specific task, and every process has one or more threads. Threads allow a process to execute on multiple CPUs at a time and take advantage of multi-core systems. A process performing a time-consuming task can break that task down into smaller ones and assign a different thread to each, so that the subtasks run in parallel. When the threads finish, their results are combined to produce the result of the whole task. Threads of the same process cooperate and share that process's resources, such as memory, the code section, the data section, open files and I/O devices.
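The split-and-combine pattern described above can be sketched in Python as follows. This is a minimal illustration, not a definitive implementation: the function names `sum_of_squares` and `parallel_sum_of_squares`, the choice of four threads, and the sample data are all assumptions made for the example. Note that in the standard CPython interpreter the global interpreter lock keeps CPU-bound threads from running Python code truly in parallel, so the sketch shows the structure of the technique rather than a guaranteed speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def sum_of_squares(chunk):
    """Subtask: handle one slice of the input (hypothetical workload)."""
    return sum(n * n for n in chunk)


def parallel_sum_of_squares(numbers, num_threads=4):
    """Split the input into chunks, give each chunk to a thread, combine results."""
    # Ceiling division so every element lands in some chunk.
    chunk_size = max(1, -(-len(numbers) // num_threads))
    chunks = [numbers[i:i + chunk_size] for i in range(0, len(numbers), chunk_size)]

    with ThreadPoolExecutor(max_workers=num_threads) as pool:
        # Each chunk is processed by a worker thread from the pool.
        partial_sums = list(pool.map(sum_of_squares, chunks))

    # Combine the partial results into the final answer.
    return sum(partial_sums)


numbers = list(range(1, 101))
total = parallel_sum_of_squares(numbers)
print(total)
```

The combined result is the same as computing the sum in a single thread; only the way the work is divided changes.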

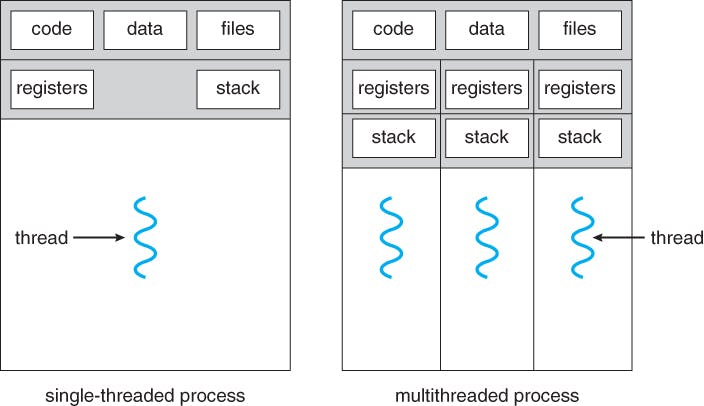

The image above shows the difference between a single-threaded process and a multithreaded process. In a single-threaded process, a single thread performs all the tasks, executes all the code and has access to all the data and files associated with that process. A multithreaded process has several threads that execute different parts of the process and share all the resources attached to it. A multithreaded process can perform multiple tasks at the same time, with each thread performing a different task, while a single-threaded process can perform just one task at a time. Many modern programs use multithreading to deliver results to users quickly and efficiently. These programs have different processes running in the background, with each process being executed by multiple threads.

A thread consists of:

- A thread ID

- A program counter

- A register set

- A stack
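Two of these components can be observed from Python, as the rough sketch below shows: each thread reports its own ID, and each keeps its own local variables on its own stack. The names `worker`, `records` and `NUM_THREADS` are hypothetical, and the barrier is only there to keep all threads alive at once, since Python may reuse the ID of a thread that has already exited.

```python
import threading

NUM_THREADS = 3
barrier = threading.Barrier(NUM_THREADS)
records = {}                      # thread ID -> final value of that thread's local counter
records_lock = threading.Lock()


def worker(start):
    counter = start               # local variable: lives on this thread's own stack
    for _ in range(1000):
        counter += 1              # no other thread can see or change this variable
    barrier.wait()                # wait until every thread is alive, so IDs are distinct
    with records_lock:
        records[threading.get_ident()] = counter


threads = [threading.Thread(target=worker, args=(i * 10_000,)) for i in range(NUM_THREADS)]
for t in threads:
    t.start()
for t in threads:
    t.join()

for thread_id, value in records.items():
    print(thread_id, value)
```

The program counter and register set are managed by the operating system and the hardware, so they are not directly visible from code at this level.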

Benefits of Multithreading

We have already touched on some of the benefits of multithreading, such as speed and efficiency. The main benefits are described below.

Parallelization: Multithreading allows different tasks to be performed on different threads in parallel. Because multiple tasks can execute at the same time, the process as a whole finishes sooner.

Specialization: Multithreading allows each thread to focus on a specialized task. Each thread is assigned a specific task and higher priority can be given to threads performing more important tasks.

Responsiveness: Multithreading allows an interactive application to remain responsive to the user. If one thread is blocked or busy with a time-consuming task, the other threads can still respond to the user, because each thread is scheduled independently.
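As a rough sketch of this idea, the Python snippet below hands a slow job to a background thread while the main thread stays free to do other work. The `slow_task` function, the 0.2 second sleep standing in for real work, and the `ticks` counter standing in for "responding to the user" are all assumptions made for illustration.

```python
import threading
import time

done = threading.Event()
result = {}


def slow_task():
    time.sleep(0.2)               # stands in for a time-consuming operation
    result["value"] = 42          # hypothetical result of that operation
    done.set()                    # signal the main thread that the work is finished


worker = threading.Thread(target=slow_task)
worker.start()

ticks = 0
while not done.is_set():
    ticks += 1                    # the main thread is not blocked and could handle user input here
    time.sleep(0.01)

worker.join()
print("main thread stayed responsive for", ticks, "iterations; result:", result["value"])
```

If `slow_task` were called directly instead of on its own thread, the main thread could do nothing else until it returned.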

Scalability: Multithreading makes a program easier to scale. More tasks can be assigned to a process and still be executed efficiently, since those tasks can be shared among threads.

Resource sharing: Threads share the memory and resources of the process they belong to by default. This allows an application to have several different threads of activity within the same address space. It is also economical: creating a thread is cheaper than creating a process because, apart from its own stack and register set, a new thread uses the memory and resources already allocated to the process it belongs to.
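The shared address space can be illustrated with a short Python sketch. Here every thread writes into the same list object without any copying or message passing; the names `shared_results` and `worker` are hypothetical, and each thread is given its own slot so that no two threads write to the same element.

```python
import threading

NUM_THREADS = 4
# A single list in the process's memory, visible to every thread.
shared_results = [None] * NUM_THREADS


def worker(index):
    # Each thread writes only to its own slot of the shared list.
    shared_results[index] = index * index


threads = [threading.Thread(target=worker, args=(i,)) for i in range(NUM_THREADS)]
for t in threads:
    t.start()
for t in threads:
    t.join()

# The main thread sees what the worker threads wrote.
print(shared_results)
```

Separate processes would each get their own copy of such a list and would need an explicit mechanism to exchange the data.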

Concurrency

Concurrency is the ability of a program to deal with multiple tasks at once. Different parts of the program make progress during overlapping periods of time, and on a multi-core system they can run truly in parallel, to produce a final outcome. Concurrency can improve the speed and efficiency of a program and is commonly achieved through multithreading.

Multithreading has become extremely important to the efficiency of applications. With multiple threads and a single core, your application has to switch back and forth between threads to give the illusion of multitasking. A core is a processing unit within a CPU: it receives instructions and performs the calculations or operations needed to carry them out. Modern CPUs come with multiple cores.

With multiple cores, applications can take advantage of the underlying hardware by running each thread on a separate core, making your application more responsive and efficient. Multithreading allows you to take full advantage of your CPU and its multiple cores.

Basic Concepts in Multithreading

Locking

Locks are an important feature that makes multithreading safe. A lock is a synchronization mechanism used to limit access to a resource in an environment where there are many threads of execution. A thread must acquire the lock before it can access the resource, so threads take turns. This prevents two threads from mutating or writing to a resource at the same time. A common example is a mutex (mutual exclusion lock).
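The turn-taking behaviour can be sketched with Python's `threading.Lock`, which acts as a mutex. In this illustrative example the `use_resource` function and the `inside` and `max_inside` counters are hypothetical names; they simply record how many threads are in the protected section at the same moment.

```python
import threading
import time

lock = threading.Lock()           # a mutex: only one thread can hold it at a time
inside = 0                        # number of threads currently using the resource
max_inside = 0                    # highest value of `inside` ever observed


def use_resource():
    global inside, max_inside
    with lock:                    # acquire the lock; released automatically on exit
        inside += 1
        max_inside = max(max_inside, inside)
        time.sleep(0.001)         # stands in for work on the shared resource
        inside -= 1


threads = [threading.Thread(target=use_resource) for _ in range(8)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print("most threads inside at once:", max_inside)
```

Because every thread must hold the lock while it touches the counters, no more than one thread is ever inside the protected section.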

Deadlocks

Deadlocks happen when two or more threads are unable to continue executing because the resource required by the first thread is held by the second, and the resource required by the second thread is held by the first. In other words, a thread enters the waiting state to access a resource held by another thread that is itself waiting. Deadlocks can be prevented or handled through lock ordering, lock timeouts, and deadlock detection.
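Lock ordering, the first of these techniques, can be sketched as follows. The `Account` class, the `transfer` function and the use of `account_id` as the ordering key are assumptions made for this example. Two threads transfer money in opposite directions, which would risk a deadlock if each one locked its own source account first; here both always lock the account with the lower ID first.

```python
import threading


class Account:
    """Hypothetical account with its own lock."""

    def __init__(self, account_id, balance):
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()


def transfer(src, dst, amount):
    # Lock ordering: always acquire the lock of the lower account_id first,
    # regardless of transfer direction. Assumes src and dst are different accounts.
    first, second = sorted((src, dst), key=lambda account: account.account_id)
    with first.lock:
        with second.lock:
            src.balance -= amount
            dst.balance += amount


def many_transfers(src, dst, count=1000):
    for _ in range(count):
        transfer(src, dst, 1)


a = Account(1, 1000)
b = Account(2, 1000)

t1 = threading.Thread(target=many_transfers, args=(a, b))
t2 = threading.Thread(target=many_transfers, args=(b, a))
t1.start()
t2.start()
t1.join()
t2.join()

print(a.balance, b.balance)
```

Since both threads request the locks in the same order, neither can end up holding one lock while waiting for the other thread to release the second.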

Race conditions

Race conditions happen when threads run through critical sections without synchronization. The threads “race” through the critical section to read or write shared resources, and the program output changes depending on the order in which the threads finish the “race”. In a race condition, threads access shared resources or program variables that other threads may be working on at the same time, leaving the application data inconsistent.
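A lost update is one simple form of race condition, sketched below in Python. Real races depend on timing and are hard to reproduce, so this example uses a barrier to force the unlucky interleaving on purpose: both threads read the counter before either writes it back. The function names `unsafe_increment`, `safe_increment` and `run_two_threads` are hypothetical.

```python
import threading

counter = 0
barrier = threading.Barrier(2)
lock = threading.Lock()


def unsafe_increment():
    global counter
    seen = counter                # read the shared value
    barrier.wait()                # force both threads to read before either one writes
    counter = seen + 1            # write back a value based on a stale read


def safe_increment():
    global counter
    with lock:                    # the read-modify-write is now one critical section
        counter += 1


def run_two_threads(target):
    threads = [threading.Thread(target=target) for _ in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()


run_two_threads(unsafe_increment)
unsafe_result = counter           # one of the two increments was lost

counter = 0
run_two_threads(safe_increment)
safe_result = counter             # both increments are kept

print("without synchronization:", unsafe_result)
print("with a lock:", safe_result)
```

Without the barrier the unsynchronized version would often appear to work, which is what makes race conditions difficult to find by testing alone.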